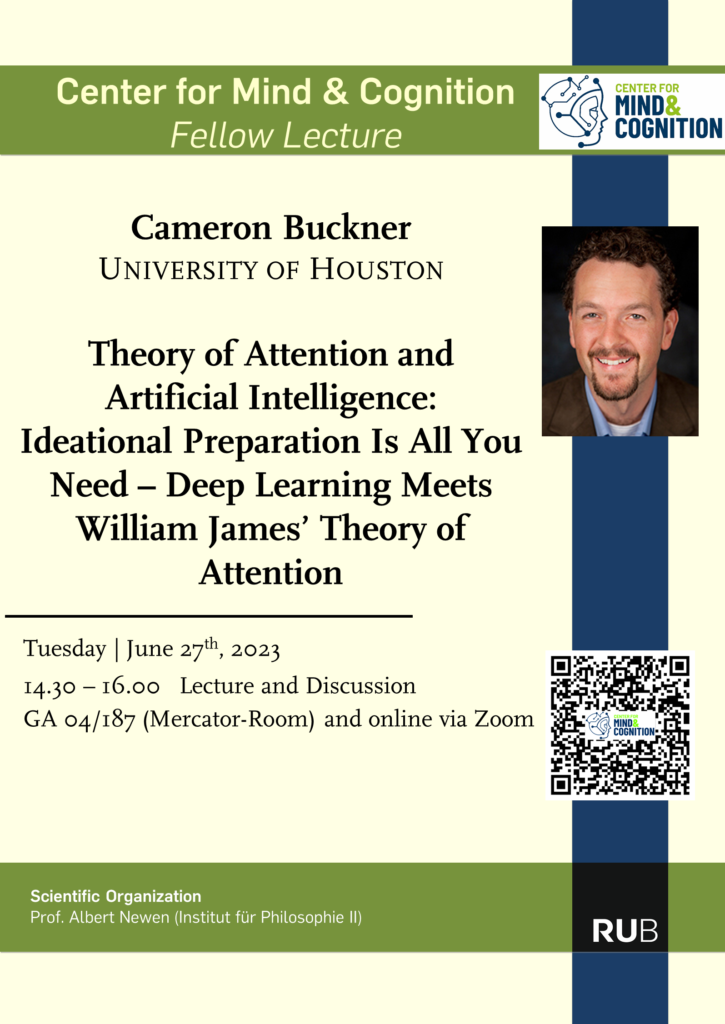

Theory of Attention and Artificial Intelligence: Ideational Preparation Is All You Need – Deep Learning Meets William James’ Theory of Attention

Tuesday, 27 June 2023, 14:30-16:00

Location (hybrid): GA 04/187 Mercatorraum and online via Zoom:

https://us02web.zoom.us/j/89147315543?pwd=aTNPMzdJdkhZbFluUXJKeHp5emVNQT09

Prof. Cameron Buckner, University of Houston (USA)

Fellow-Lecture

Abstract: Deep learning is a research area in computer science that has, over the last ten years, produced a series of transformative breakthroughs in artificial intelligence, creating systems that can recognize complex objects in natural photographs as well as or better than humans, defeat human grandmasters in strategy games such as chess, Go, or StarCraft II, create bodies of novel text that are sometimes indistinguishable from those produced by humans, and predict how proteins will fold more accurately than human microbiologists who have devoted their lives to the task. The artificial neural network approach behind deep learning is usually aligned with empiricist theories of the mind, which can be traced back to philosophers such as Locke and Hume.

Contemporary rationalists like Gary Marcus and Jerry Fodor have criticized the innovations behind some of these breakthroughs, because they appeal to innate structure, which is supposed to be off-limits to empiricists. I argue, however, that these innovations are consistent with historical empiricism, as they implement roles attributed to domain-general psychological faculties like perception, memory, imagination, and attention, which were frequently invoked by paradigm empiricists in their explanations of the mind’s ability to extract abstractions from sensory experience. Computer scientists may benefit from reviewing these philosophers’ accounts of these faculties, for they anticipated many of the coordination and control problems that will confront deep learning theorists as they aim to bootstrap their models to greater levels of cognitive complexity using more ambitious architectures with multiple interacting faculty modules.

In this talk, I focus on William James’ account of attention in the Principles of Psychology, comparing the roles he assigned to attention in the extraction of abstract knowledge from experience with the innovations behind many recent architectures in deep learning. Despite numerous alignments, I argue that deep learning still has much to gain by considering other aspects of James’ theory that have not yet been fully implemented, especially the “ideational preparation” component, which aligns more naturally with predictive processing accounts of cognition.